Ordinary evacuation drills are difficult to organize. Many people have to gather to carry them out. A training field has to be occupied, which is sometimes impossible, especially when the field is a public space. Such drills also have shortcomings. A real evacuation is usually a dangerous situation, but evacuation drills must be conducted safely. In the drills, people just follow a prepared behavioral plan and do not engage in serious decision making. We think online drills in virtual space can be a solution, because 1) people can join the drills through the Internet; 2) it is easy to prepare the training field; 3) people can experience dangerous situations safely; and 4) we can apply gaming simulation.

Virtual space training is already popular for individual tasks such as driving vehicles, but not for collaborative tasks such as emergency evacuation. Individual tasks need interactive physics simulations. Collaborative tasks need interactive social simulations in addition. Existing crowd simulations are not very interactive because they are analytic simulations. To make social simulations sufficiently interactive, we have to build agents that can socially interact with human avatars. To this end, we developed a virtual city space system, 'FreeWalk/Q,' which combines the scenario description language 'Q' with the interaction platform 'FreeWalk.'

Previous studies have developed training environments in which a few users and a few social agents take part in the same simulation. However, those systems control users and agents separately in their internal architecture. This results in a complex and inflexible system that cannot deal effectively with many users and many agents. Moreover, it is impossible to design and test simulations without involving human users.

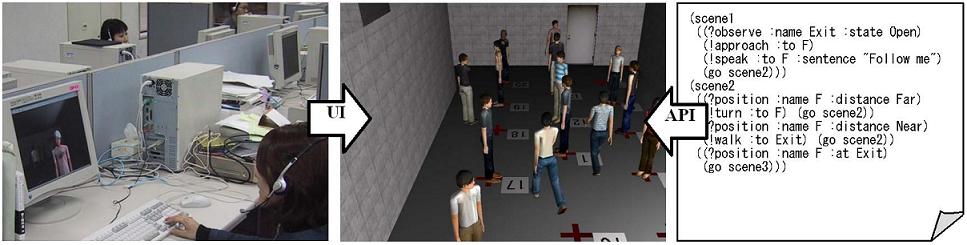

In FreeWalk, users and agents are not separated. 'Actions,' including walking and speaking, and 'Cues,' including looking and hearing, can be executed through both the API and the UI in the same way. Users manipulate their avatars through the UI, and agents are controlled through the API. As a result, crowd simulations can be shared by users and agents (see Figure 1). We devised the following mechanisms.

Figure 1. Crowded evacuation simulations

Walking: The UI accepts the direction in which to proceed, while the API accepts the destination point. This difference is eliminated by the API, and the walking module generates the next steps from walking directions.

Speech: Users talk and listen, while agents generate and understand text messages. In the simulations, many users and many agents communicate with each other. Thus, our speech module establishes voice streams between UIs, sends text messages between APIs, and uses speech synthesis and recognition engines to support text-based exchanges between UIs and APIs.

Gesture: We developed a layered interface that consists of low-level UI modules and a high-level API module, which calls the UI modules.
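The walking and speech mechanisms above can be illustrated with a minimal sketch. It is not FreeWalk's code: `Avatar`, `ui_walk`, `api_walk`, `speech_channel`, and the `step_length` value are hypothetical names and assumptions chosen for illustration. The sketch assumes that the API reduces a destination to a direction so that one walking routine serves both entry points, and that a UI-to-API utterance goes through speech recognition while an API-to-UI utterance goes through speech synthesis.

```python
import math
from dataclasses import dataclass


@dataclass
class Avatar:
    """Shared body state for a user-driven or agent-driven avatar (hypothetical)."""
    x: float = 0.0
    y: float = 0.0
    step_length: float = 0.5  # assumed distance covered per simulation tick

    def step(self, direction):
        """Walking module: advance one step along a direction vector."""
        dx, dy = direction
        norm = math.hypot(dx, dy)
        if norm == 0.0:
            return  # no direction given, so stay in place
        self.x += self.step_length * dx / norm
        self.y += self.step_length * dy / norm


def ui_walk(avatar, direction):
    """UI path: the user supplies a direction, which is passed through as-is."""
    avatar.step(direction)


def api_walk(avatar, destination):
    """API path: turn a destination into a direction, then reuse step().

    Returns True once the avatar has arrived at the destination.
    """
    dx, dy = destination[0] - avatar.x, destination[1] - avatar.y
    if math.hypot(dx, dy) <= avatar.step_length:
        avatar.x, avatar.y = destination  # close enough: snap to the goal
        return True
    avatar.step((dx, dy))
    return False


def speech_channel(sender, receiver):
    """Pick the transport for one utterance; each side is "ui" or "api"."""
    if sender == "ui" and receiver == "ui":
        return "voice stream"
    if sender == "api" and receiver == "api":
        return "text message"
    if sender == "ui":
        return "speech recognition, then text message"
    return "text message, then speech synthesis"
```

An agent would call `api_walk` once per tick until it returns `True`, while a user's key or joystick input would call `ui_walk` directly; both end up in the same `step` routine, which is the point of the unified design.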

It has been common to design internal models in order to produce intelligent agent behavior. Our approach is instead to design an external model of each social agent's role in order to produce intelligent group behavior.

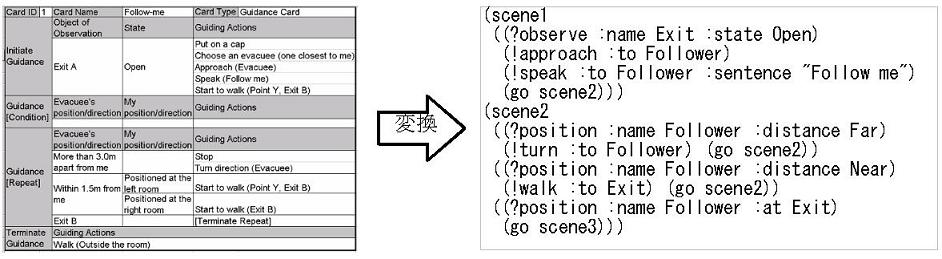

Q is a language in which you can describe inter-agent protocols without taking agents' internal models into account. Q is modeled on extended finite state machines, whose states correspond to scenes, whose inputs correspond to perceptions, and whose outputs correspond to actions. You can define each role as an interaction scenario, which is a collection of behavioral rules for each scene, e.g. "the agent carries out the action C when it observes the object B in the scene A." Figure 2 shows an "Interaction Pattern Card (IPC)," in which an application-specific behavioral pattern and its parameters are separated. IPCs support easy and effective scenario construction.
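The scene-based rule model can be sketched as a small state machine. The code below is Python, not Q syntax, and it models only a plain finite state machine, omitting whatever extensions Q adds on top. The `Rule` type, the `GUIDE_SCENARIO` role, and all cue and action names are hypothetical examples invented for this illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    cue: str         # what the agent must observe, e.g. "sees_exit"
    action: str      # what the agent then does, e.g. "point_at_exit"
    next_scene: str  # scene the scenario moves to afterwards


# Hypothetical evacuation-guide role: scene name -> rules active in that scene.
GUIDE_SCENARIO = {
    "waiting": [
        Rule("hears_alarm", "speak_follow_me", "guiding"),
    ],
    "guiding": [
        Rule("sees_evacuee_far_behind", "wait_for_evacuee", "guiding"),
        Rule("sees_exit", "point_at_exit", "done"),
    ],
    "done": [],
}


def react(scenario, scene, observation):
    """Return (action, next_scene) for one observation in the current scene.

    Observations that no rule in the scene mentions are ignored.
    """
    for rule in scenario[scene]:
        if rule.cue == observation:
            return rule.action, rule.next_scene
    return None, scene


def run(scenario, start_scene, observations):
    """Feed a sequence of observations through the scenario; collect actions."""
    scene, actions = start_scene, []
    for observation in observations:
        action, scene = react(scenario, scene, observation)
        if action is not None:
            actions.append(action)
    return scene, actions
```

Each `Rule` reads as "carry out the action when the cue is observed in this scene," and the role is described entirely by its externally visible behavior, with no internal model of the agent.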

FreeWalk agents behave according to their assigned interaction scenarios (see Figure 1). Perception commands have the prefix '?', and action commands have the prefix '!'. When a command is interpreted by the Q language process, the corresponding API function is called. The Q process is event-driven, so multiple perception commands in each scene can be observed concurrently.

Figure 2. Interaction Pattern Card
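The command dispatch described above, in which '?' commands wait for cues and '!' commands call API functions, can be sketched as a toy event-driven interpreter. This is an assumption-laden illustration in Python, not the Q implementation: `ScenarioInterpreter`, its methods, and the command names used with it are hypothetical, and the API is stood in for by a plain dictionary of callables.

```python
from collections import deque


class ScenarioInterpreter:
    """Toy interpreter: waits on several '?' cues at once, fires '!' actions."""

    def __init__(self, api):
        self.api = api         # command name without prefix -> callable
        self.watching = {}     # '?cue' -> list of '!action' commands to run
        self.events = deque()  # cue events reported by the platform

    def watch(self, cue, actions):
        """Register a perception command and the actions it triggers."""
        if not cue.startswith("?"):
            raise ValueError("perception commands must start with '?'")
        for action in actions:
            if not action.startswith("!"):
                raise ValueError("action commands must start with '!'")
        self.watching[cue] = list(actions)

    def notify(self, cue_name):
        """Called by the platform whenever something is perceived."""
        self.events.append("?" + cue_name)

    def run_pending(self):
        """Handle queued events; return the action commands that were run."""
        executed = []
        while self.events:
            cue = self.events.popleft()
            for action in self.watching.get(cue, []):
                self.api[action[1:]]()  # strip '!' and call the API function
                executed.append(action)
        return executed
```

Because every registered cue is checked against each incoming event, a scene can wait on several perception commands at the same time and react to whichever one occurs first; events that no registered cue matches are simply dropped.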

Publications:

Hideyuki Nakanishi and Toru Ishida. FreeWalk/Q: Social Interaction Platform in Virtual Space. ACM Symposium on Virtual Reality Software and Technology (VRST2004), 2004.

Contact:

Hideyuki Nakanishi (nakanishi at i.kyoto-u.ac.jp) Dept. of Social Informatics, Kyoto University